Performance Evaluation Domain

This article describes the Performance Evaluation Domain for the Teacher Preparation Data Model, provides background and rationale, and offers guidance for its application in the field.

Drivers for the Performance Evaluation Data Model

The TPDM Work Group identified the need to capture performance data about Teacher Candidates and Staff teachers to drive the analysis of Educator Preparation Program (EPP) effectiveness and improvement. The need ranges from formal performance evaluations to more informal classroom observations.

The requirement is to support the following types of evaluations:

- By a supervisor, peer, or coach, typically using a rubric

- Based upon one or more quantitative measures, like student growth or teacher attendance

- Based upon aggregated responses to a survey, like a student survey or peer survey

- Based upon ratings entered without the details of how they were derived

Performance Evaluation

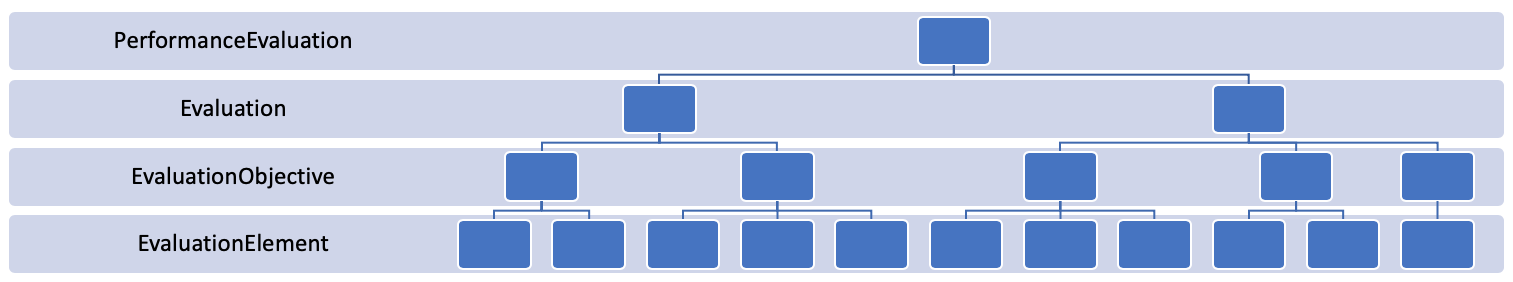

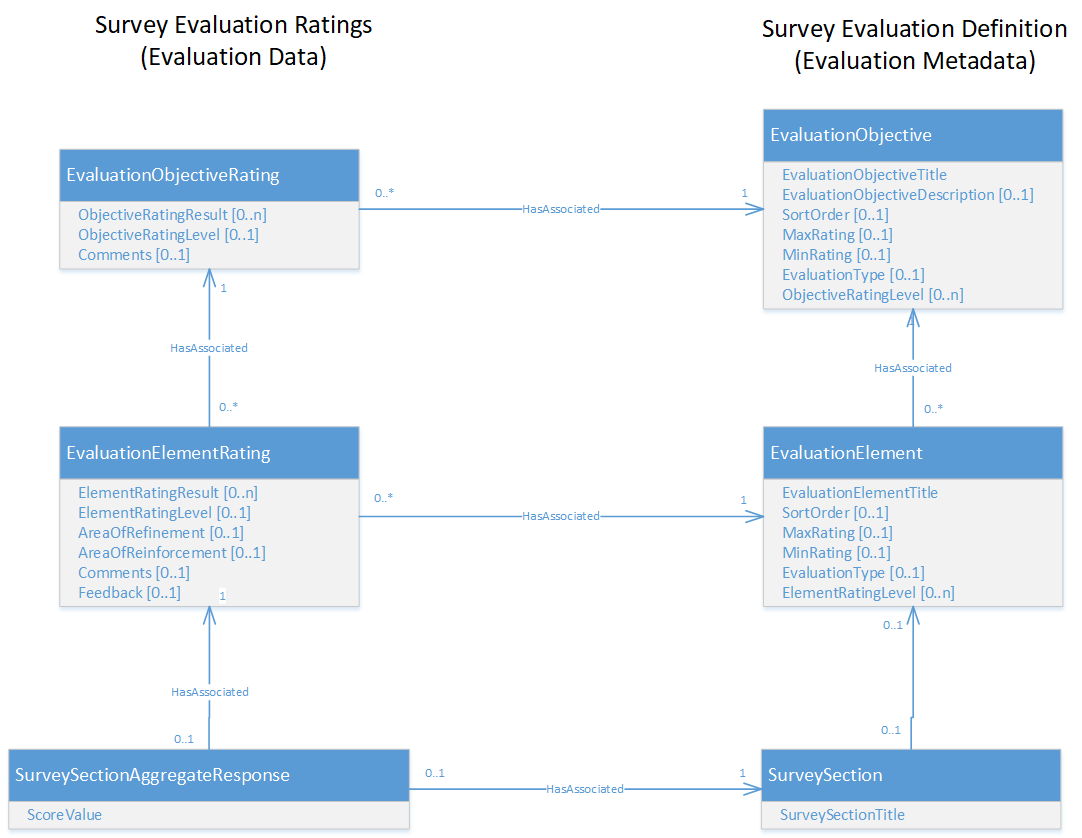

The Performance Evaluation Domain is organized as a four-level, hierarchical model as depicted below:

Figure 1. The Performance Evaluation model hierarchy

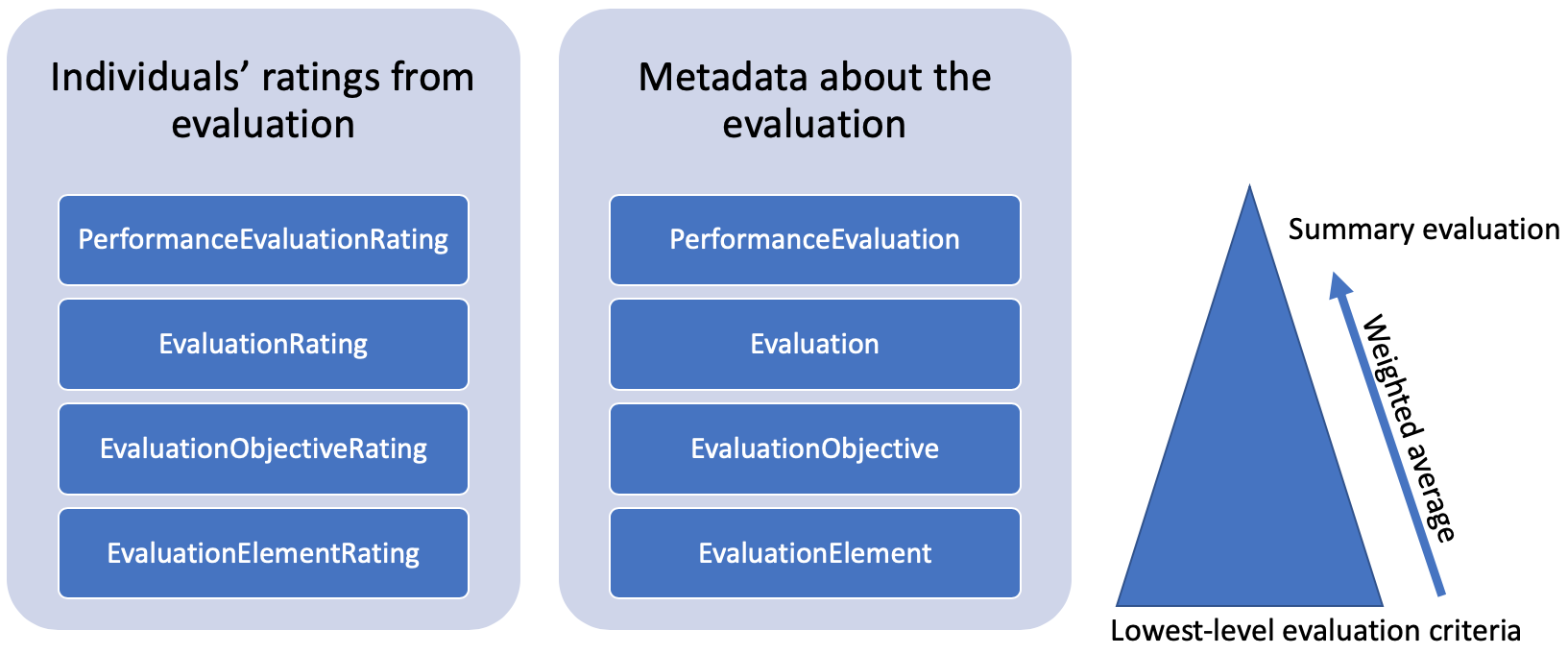

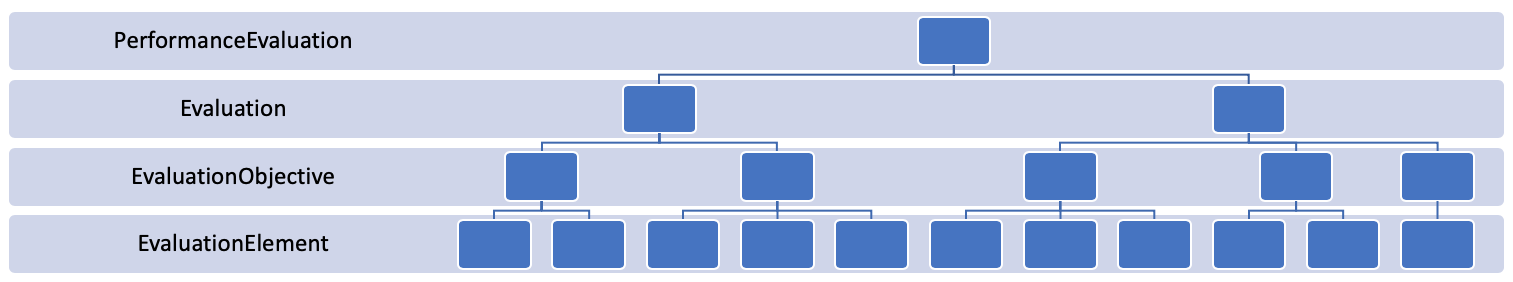

The model includes entities that describe the evaluation (i.e., the metadata) and the ratings for each component of the hierarchy for an individual. The model supports weighted averaging of the various evaluation components as they are rolled up the hierarchy.
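As a rough illustration of the weighted roll-up, the following Python sketch averages the ratings of child components into a parent rating. The function name `weighted_average` and the example ratings and weights are assumptions made for illustration only; the model does not prescribe how weights are chosen or stored.

```python
from typing import Sequence, Tuple


def weighted_average(ratings: Sequence[Tuple[float, float]]) -> float:
    """Return the weighted mean of (rating, weight) pairs."""
    total_weight = sum(weight for _, weight in ratings)
    if total_weight <= 0:
        raise ValueError("total weight must be positive")
    return sum(rating * weight for rating, weight in ratings) / total_weight


# Hypothetical element ratings under one objective, as (rating, weight) pairs.
element_ratings = [(4.0, 2.0), (3.0, 1.0), (5.0, 1.0)]
objective_rating = weighted_average(element_ratings)
```

The same function could be applied again at each level, so that objective ratings roll up into an evaluation rating and evaluation ratings roll up into a performance evaluation rating.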

Figure 2. Evaluation Rating and Metadata levels

The definitions for the Metadata levels (and associated Evaluation Rating levels) are as follows:

- Performance Evaluation. A performance evaluation of an educator, typically regularly scheduled and uniformly applied. It comprises one or more Evaluations.

- Evaluation. An evaluation instrument applied to evaluate an educator. The evaluation could be internally developed or could be an industry-recognized instrument such as TTESS or Marzano.

- Evaluation Objective. A subcomponent of an Evaluation: a specific educator objective or domain of performance that is being evaluated. For example, the objectives for a teacher evaluation might include Planning and Preparation, Classroom Management, Delivery of Instruction, Communication, Professional Responsibilities, and so forth.

- Evaluation Element. The lowest-level Element or criterion of performance being evaluated by rubric, quantitative measure, or aggregate survey response. For example, the criteria for a Delivery of Instruction objective may include Elements like Organization, Clarity, Questioning, and Engagement.
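The four metadata levels can be pictured as nested containers. The sketch below is a simplified illustration in Python: the class names follow the level names above, but the `title` field, the list fields, the helper `element_titles`, and the sample titles "Classroom Observation" and "Annual Performance Evaluation" are assumptions, not the model's actual attributes.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class EvaluationElement:
    title: str


@dataclass
class EvaluationObjective:
    title: str
    elements: List[EvaluationElement] = field(default_factory=list)


@dataclass
class Evaluation:
    title: str
    objectives: List[EvaluationObjective] = field(default_factory=list)


@dataclass
class PerformanceEvaluation:
    title: str
    evaluations: List[Evaluation] = field(default_factory=list)


def element_titles(performance_evaluation: PerformanceEvaluation) -> List[str]:
    """Walk all four levels and collect the element titles in order."""
    return [
        element.title
        for evaluation in performance_evaluation.evaluations
        for objective in evaluation.objectives
        for element in objective.elements
    ]


# Example built from the objective and elements named in the text.
delivery = EvaluationObjective(
    "Delivery of Instruction",
    [EvaluationElement(t) for t in ("Organization", "Clarity", "Questioning", "Engagement")],
)
observation = Evaluation("Classroom Observation", [delivery])
annual = PerformanceEvaluation("Annual Performance Evaluation", [observation])
```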

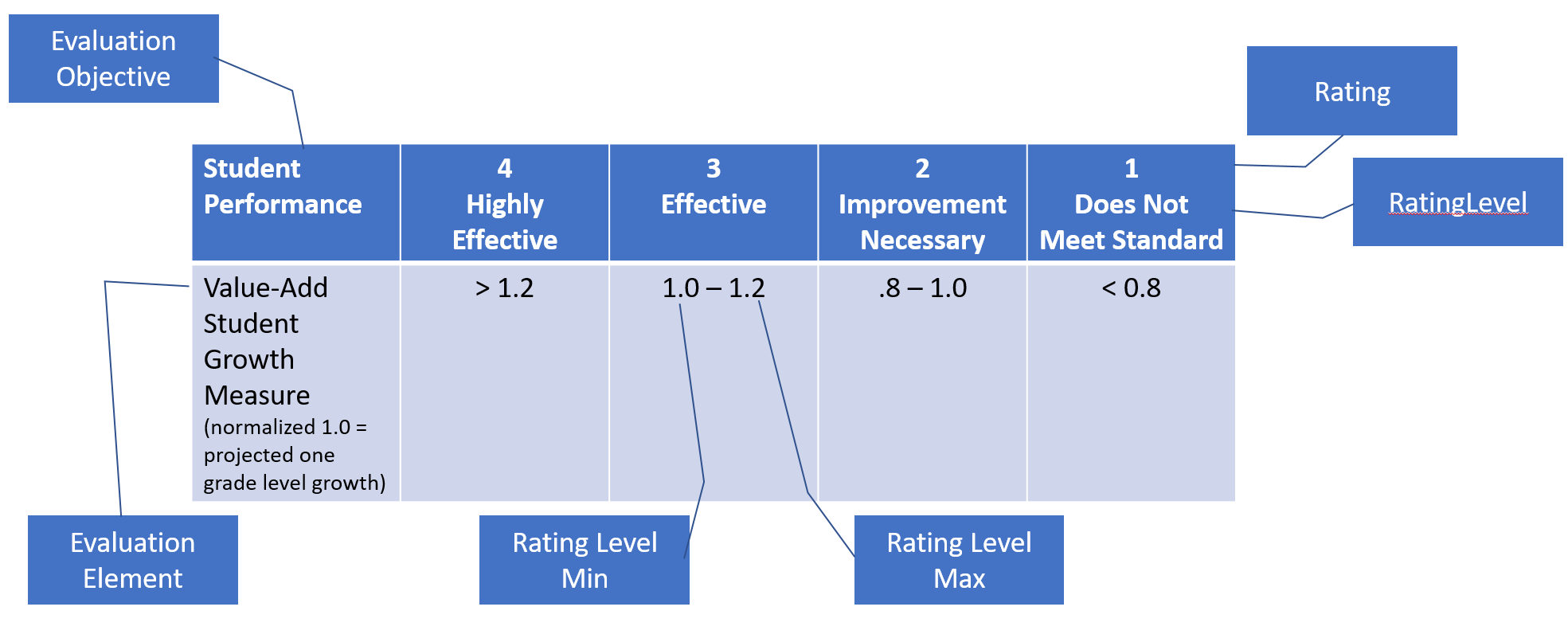

The evaluation of each Evaluation Element results in a Rating, for example, a score on a five-point scale where 1 is poor and 5 is excellent. Weighting of criteria can be accomplished by having different rating scales.
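One way to read the point about rating scales is that an element scored on a larger scale contributes more to a summed total. The Python sketch below shows that arithmetic under this assumed interpretation; the element names, scales, and the helper `percent_of_maximum` are hypothetical.

```python
from typing import Dict, Tuple


def percent_of_maximum(ratings: Dict[str, Tuple[float, float]]) -> float:
    """Sum the ratings and divide by the sum of each element's maximum rating."""
    total = sum(rating for rating, _ in ratings.values())
    maximum = sum(max_rating for _, max_rating in ratings.values())
    if maximum <= 0:
        raise ValueError("maximum total must be positive")
    return total / maximum


# (rating, maximum rating): Engagement is on a 10-point scale, so it carries
# twice the influence of Organization, which is on a 5-point scale.
ratings = {
    "Organization": (4.0, 5.0),
    "Engagement": (8.0, 10.0),
}
share = percent_of_maximum(ratings)  # 12 of 15 possible points
```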

Ratings may be labeled with a Rating Level, for example, 1: Does not Meet Standard, 2: Improvement Necessary, 3: Effective, 4: Highly Effective.
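As a small illustration, a rating can be mapped to its Rating Level with a lookup over minimum thresholds. The labels below come from the example above; the threshold banding (each level starting at its whole-number value) and the function name `rating_level` are assumptions made for this sketch.

```python
from typing import List, Tuple

# (minimum rating, label), in ascending order of minimum rating.
RATING_LEVELS: List[Tuple[float, str]] = [
    (1.0, "Does not Meet Standard"),
    (2.0, "Improvement Necessary"),
    (3.0, "Effective"),
    (4.0, "Highly Effective"),
]


def rating_level(rating: float) -> str:
    """Return the label of the highest level whose minimum the rating reaches."""
    if rating < RATING_LEVELS[0][0]:
        raise ValueError("rating is below the lowest defined level")
    label = RATING_LEVELS[0][1]
    for minimum, name in RATING_LEVELS:
        if rating >= minimum:
            label = name
    return label
```

With this banding, a rolled-up rating such as 3.4 would be labeled Effective.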

The model supports the following types of evaluations, which can be mixed and weighted together:

- Rubric

- Quantitative measure

- Aggregate response to a survey

- Evaluated without (entered) criteria

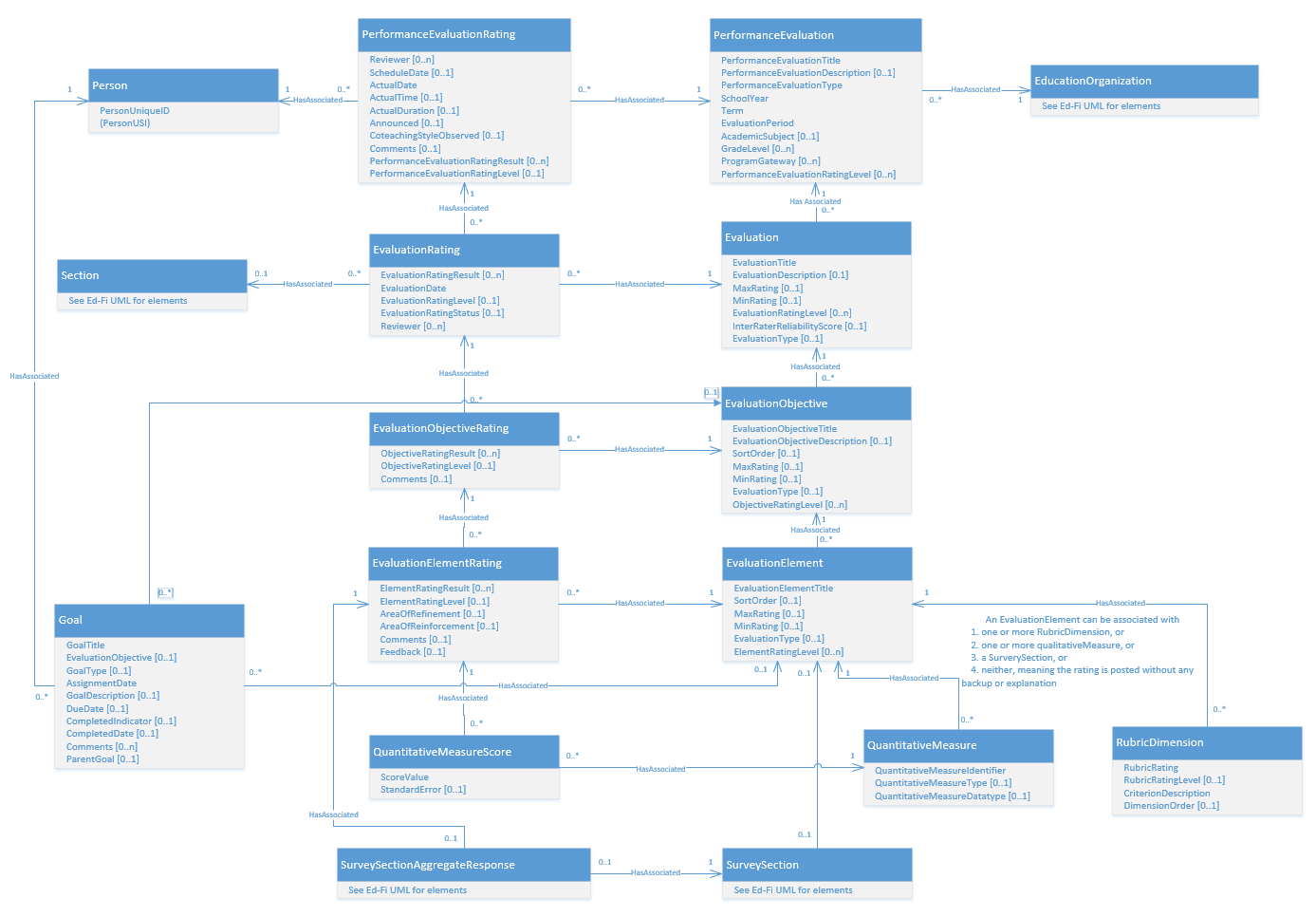

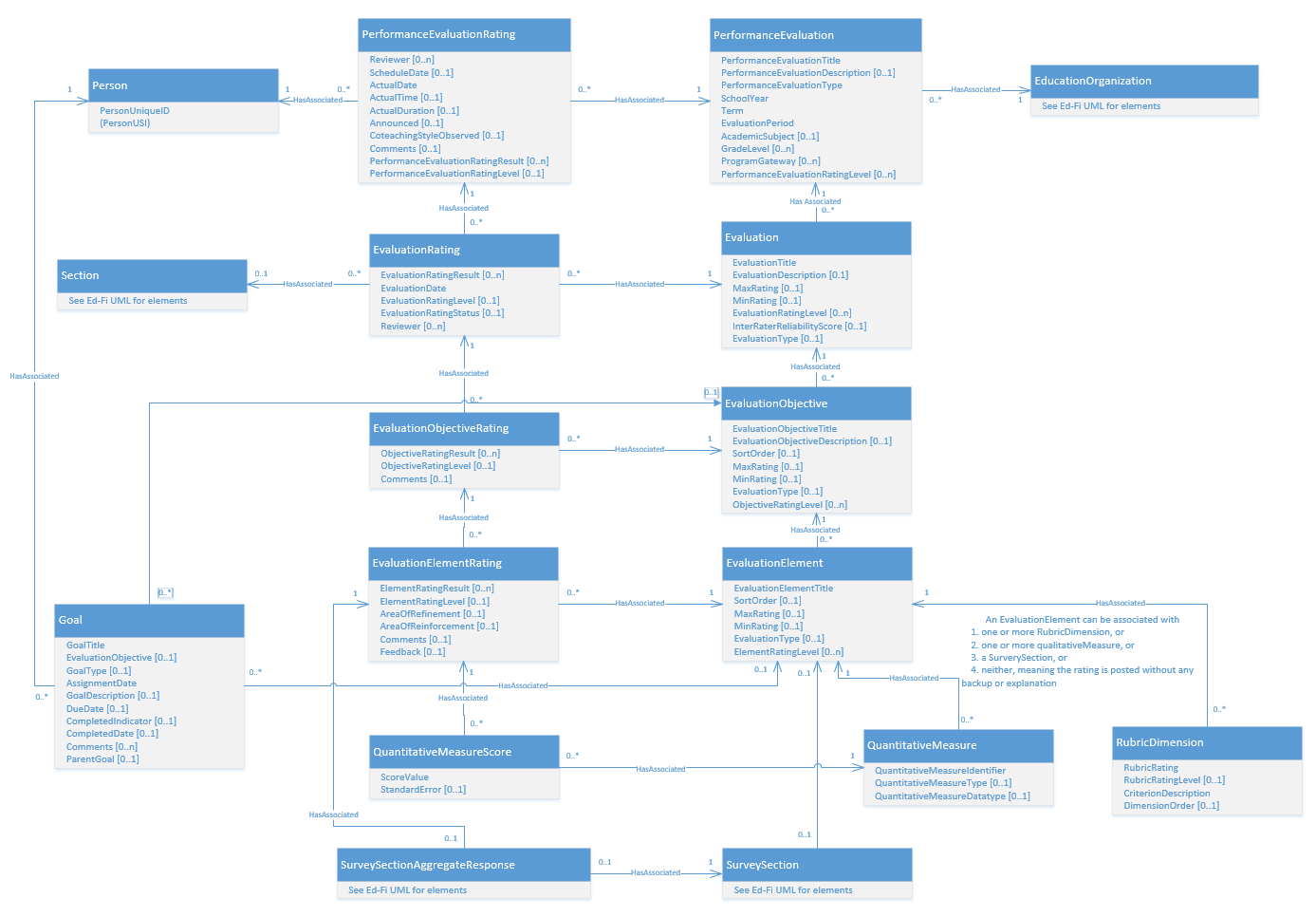

The PerformanceEvaluation model UML is shown below. Key items of note:

- PerformanceEvaluation entities are associated with Person, since Evaluations can be associated with the different person-role entities, specifically Staff, Candidate, and Student.

- The two columns of entities at center in the UML diagram reflect a Person’s evaluation ratings and the evaluation metadata, as described above.

- For educators who are evaluated across one or more Sections, an optional association is provided from EvaluationRating to Section.

- The entities at the bottom represent the basis for rating the EvaluationElement:

  - A rubric is defined by RubricDimension, with the rating recorded in the EvaluationElementRating.

  - A quantitative measure is defined by QuantitativeMeasure and recorded in the QuantitativeMeasureScore.

  - A survey response is defined by the SurveySection (which indicates a collection of SurveyQuestions), with the responses tallied in the SurveySectionAggregateResponse.

- A Goal entity is defined to record and track performance goals related to an EvaluationElement.

Figure 3. The Performance Evaluation model

Using the Performance Evaluation Model

This section illustrates how the data model is used for the various types of evaluation.

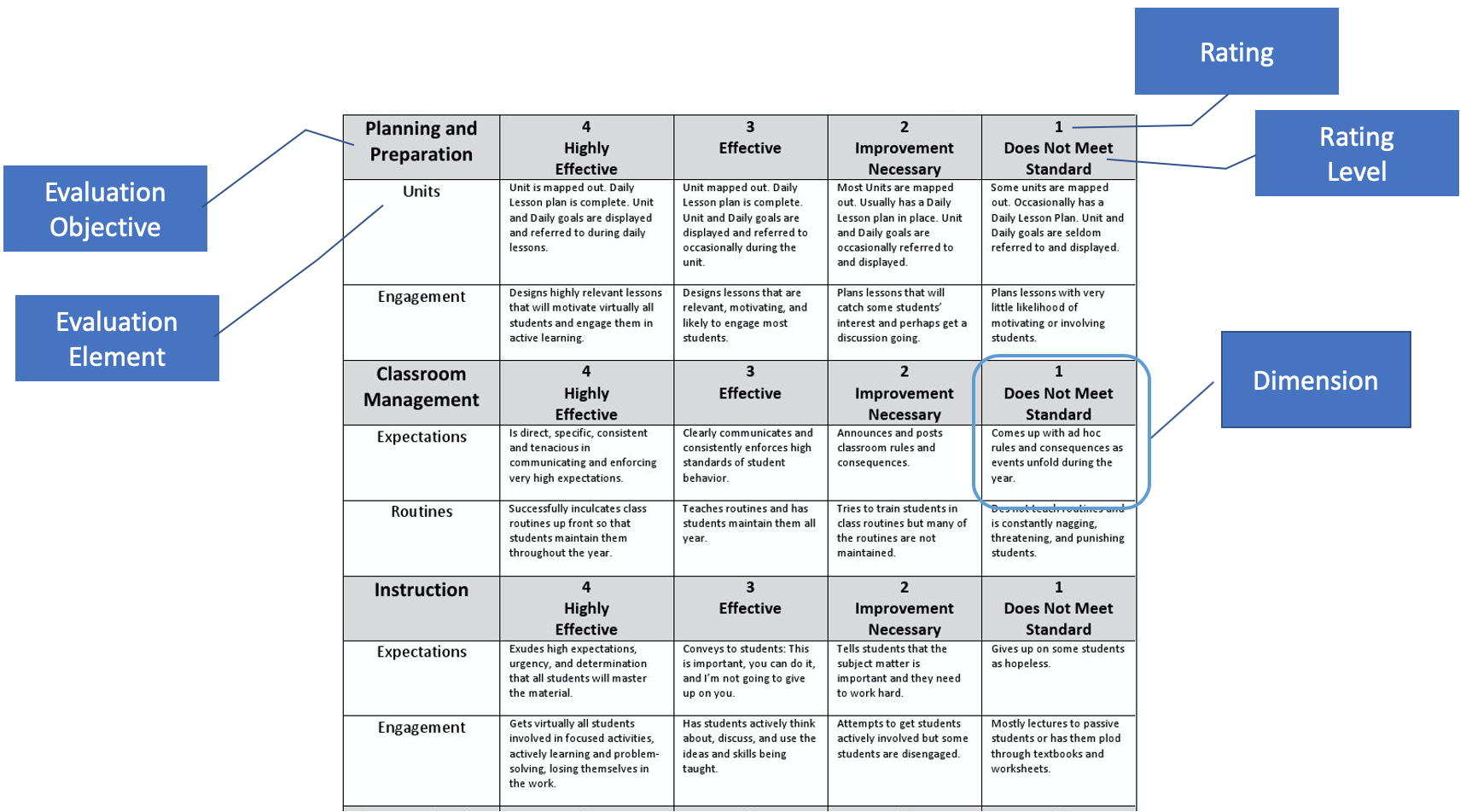

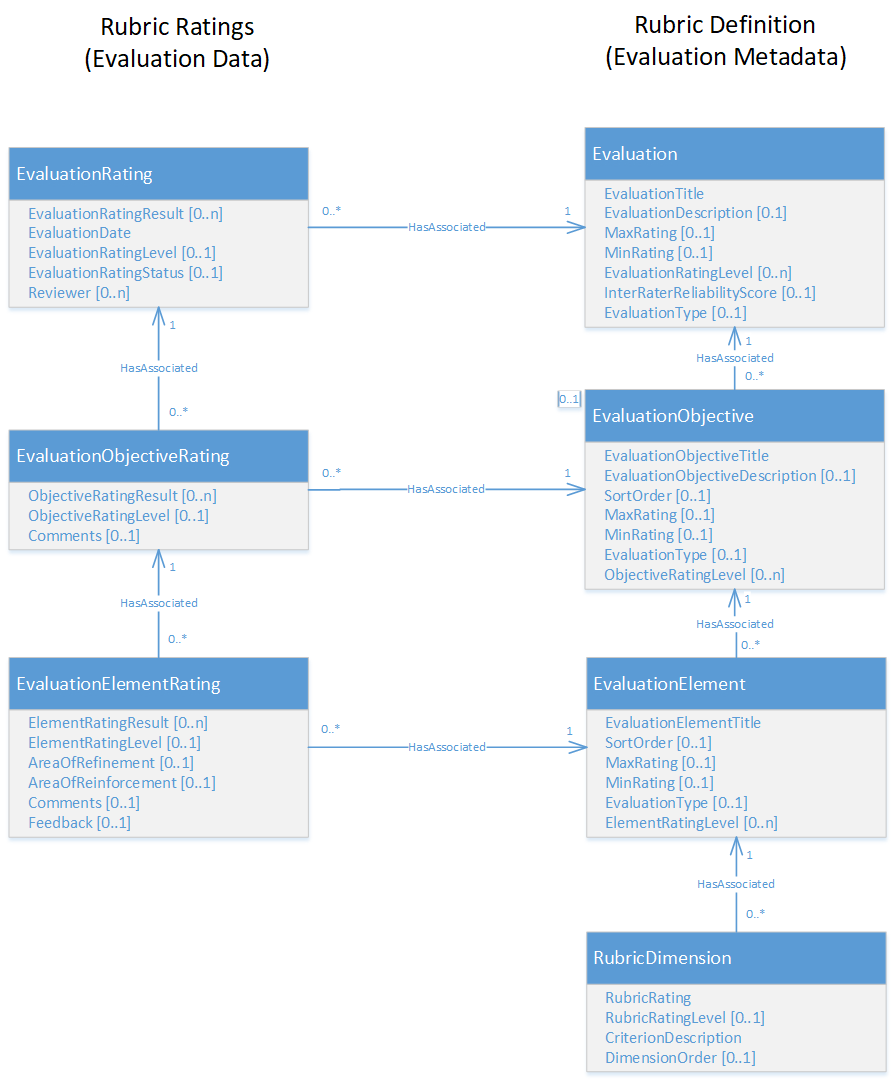

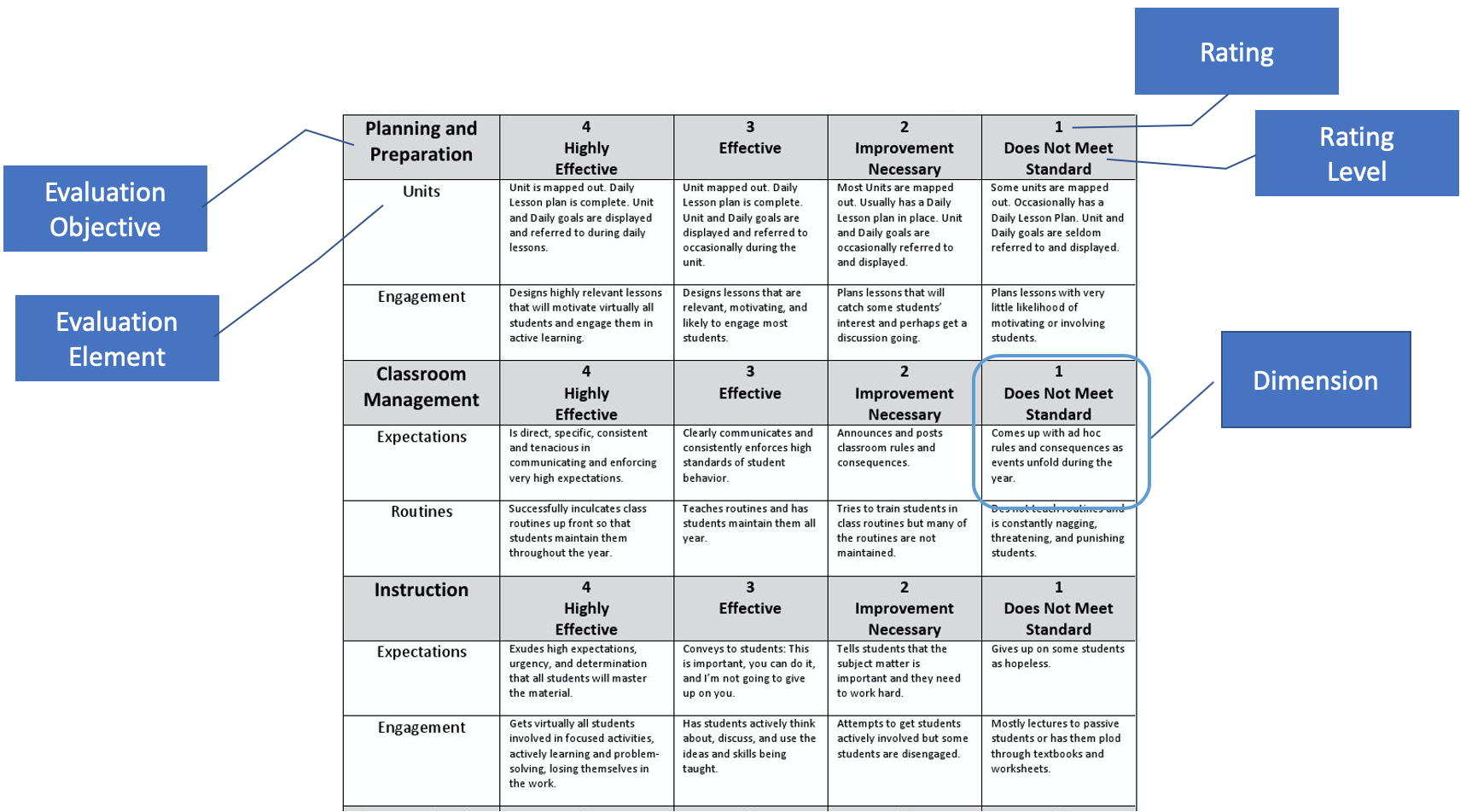

The following diagram illustrates how a rubric is mapped to the data model:

Figure 4. Rubric definition mapping

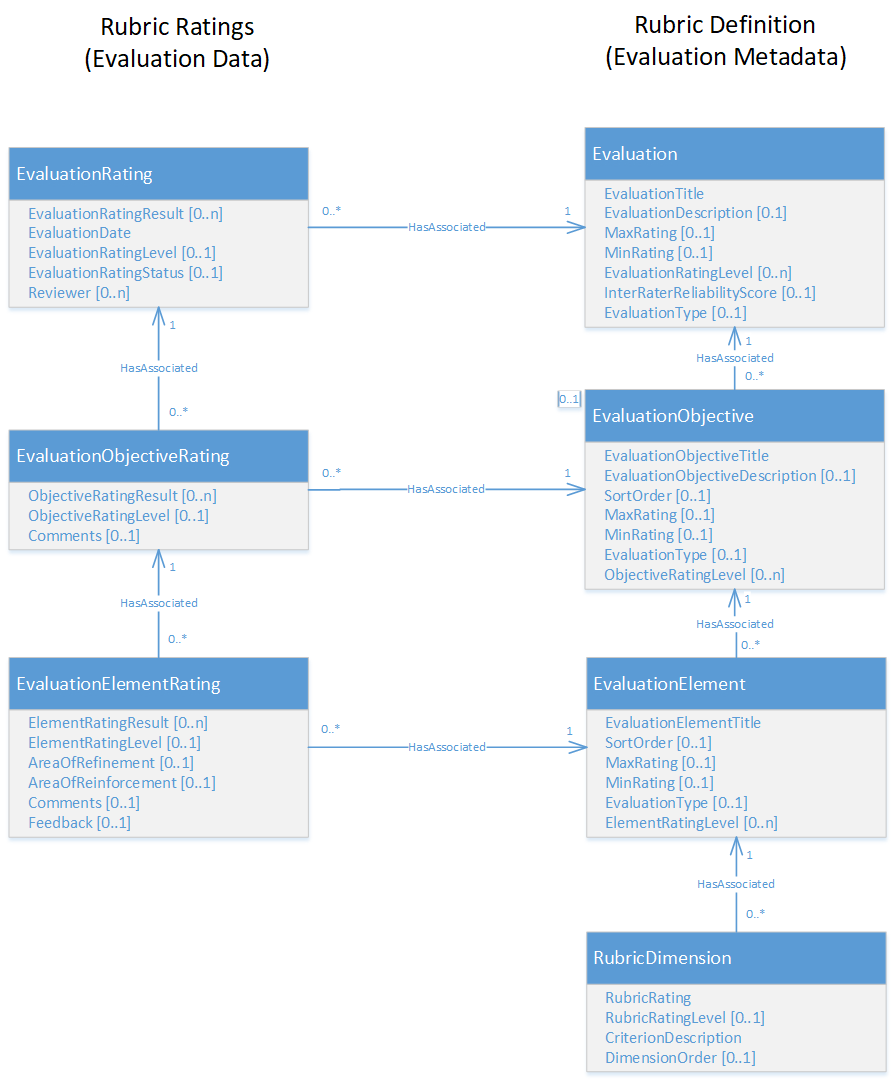

A rubric-based evaluation uses the following entities:

Figure 5. Rubric-based Evaluation model

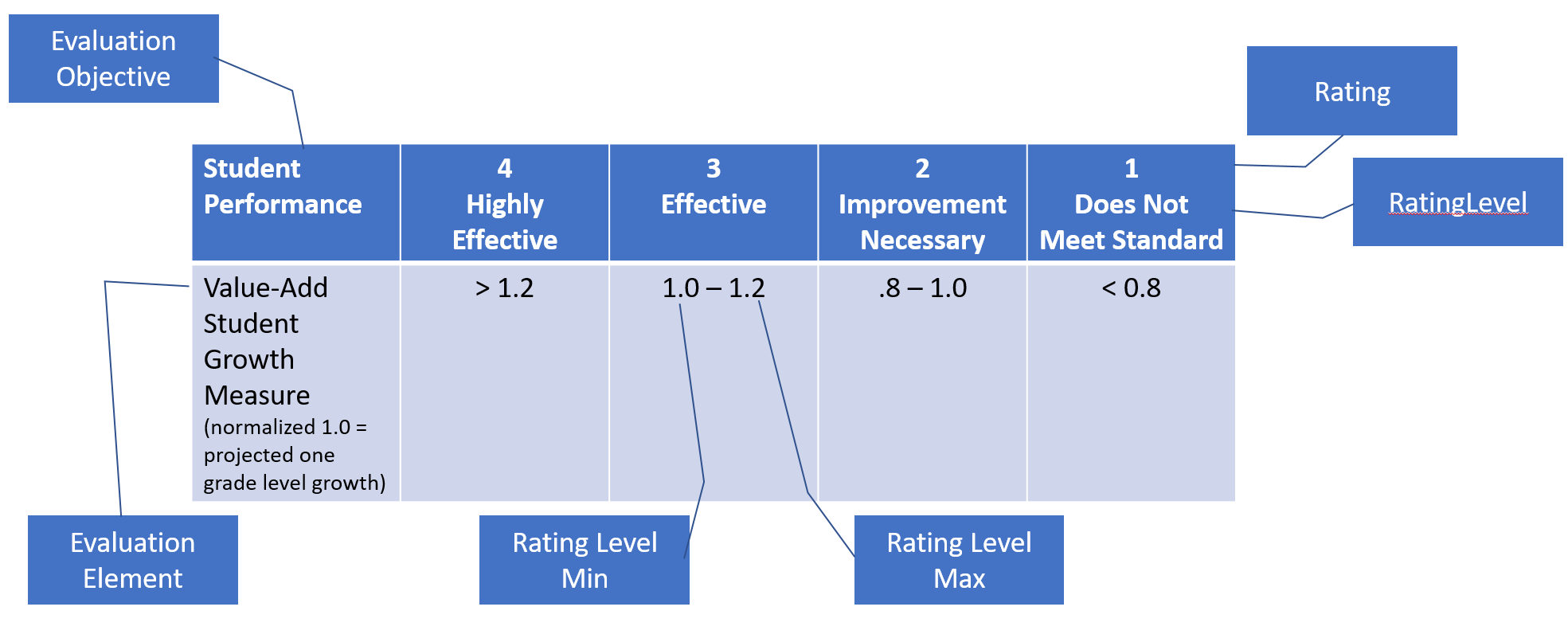

The following diagram illustrates how a quantitative measure, such as a Value-Add Student Growth Measure, is mapped into the data model:

Figure 6. Quantitative Measure mapping

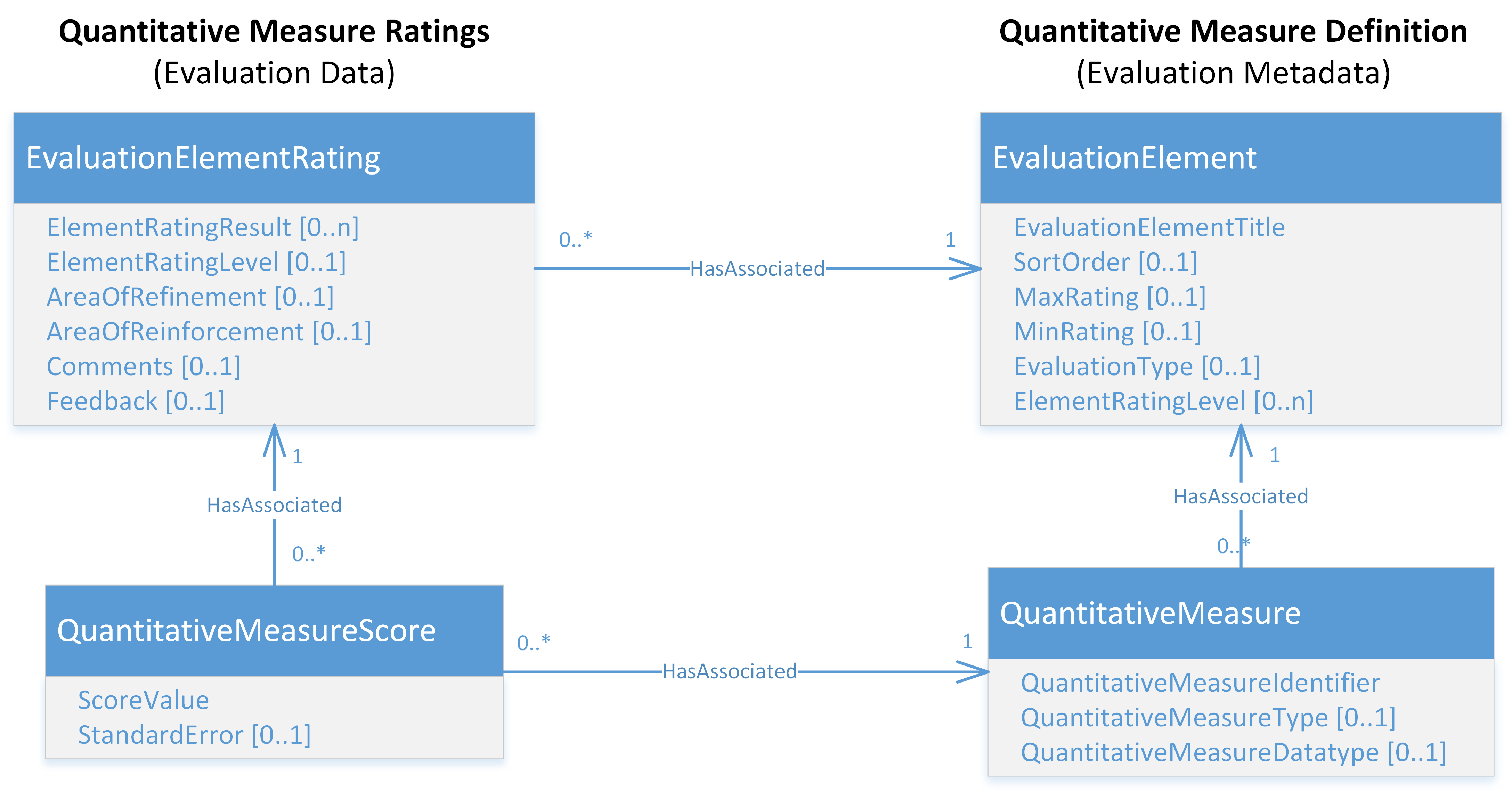

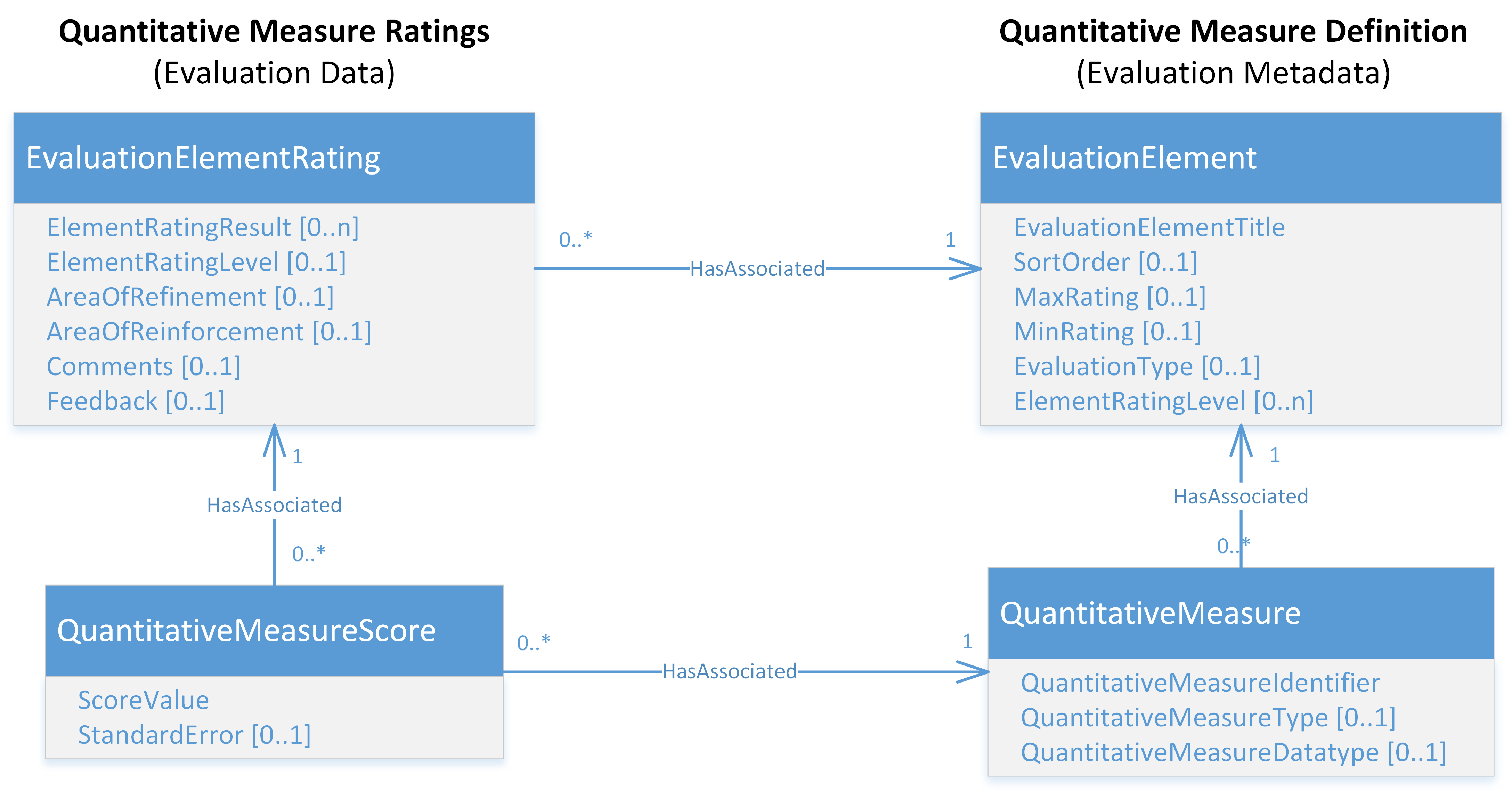

A quantitative measure uses the following entities:

Figure 7. Quantitative Measure evaluation model

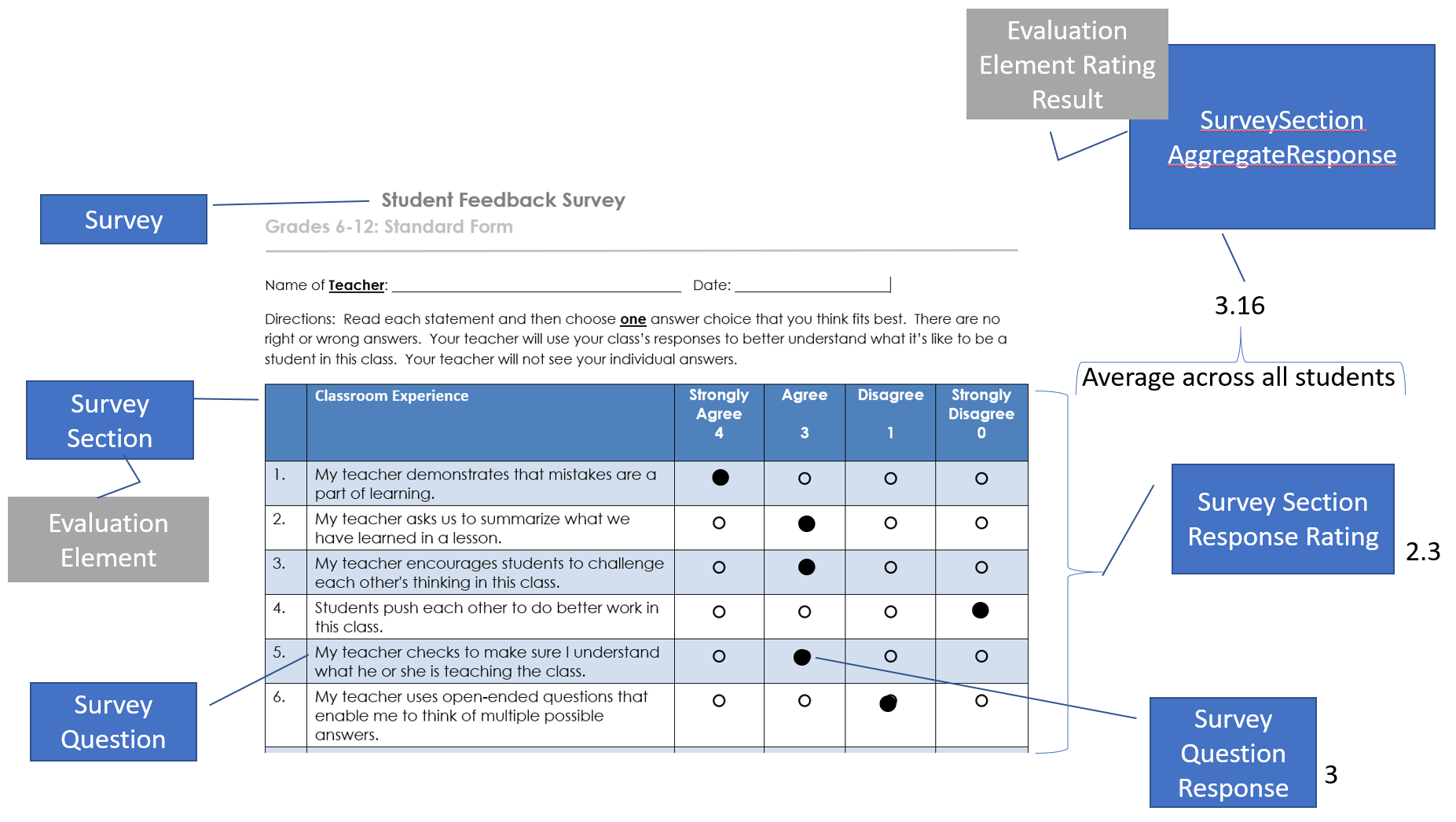

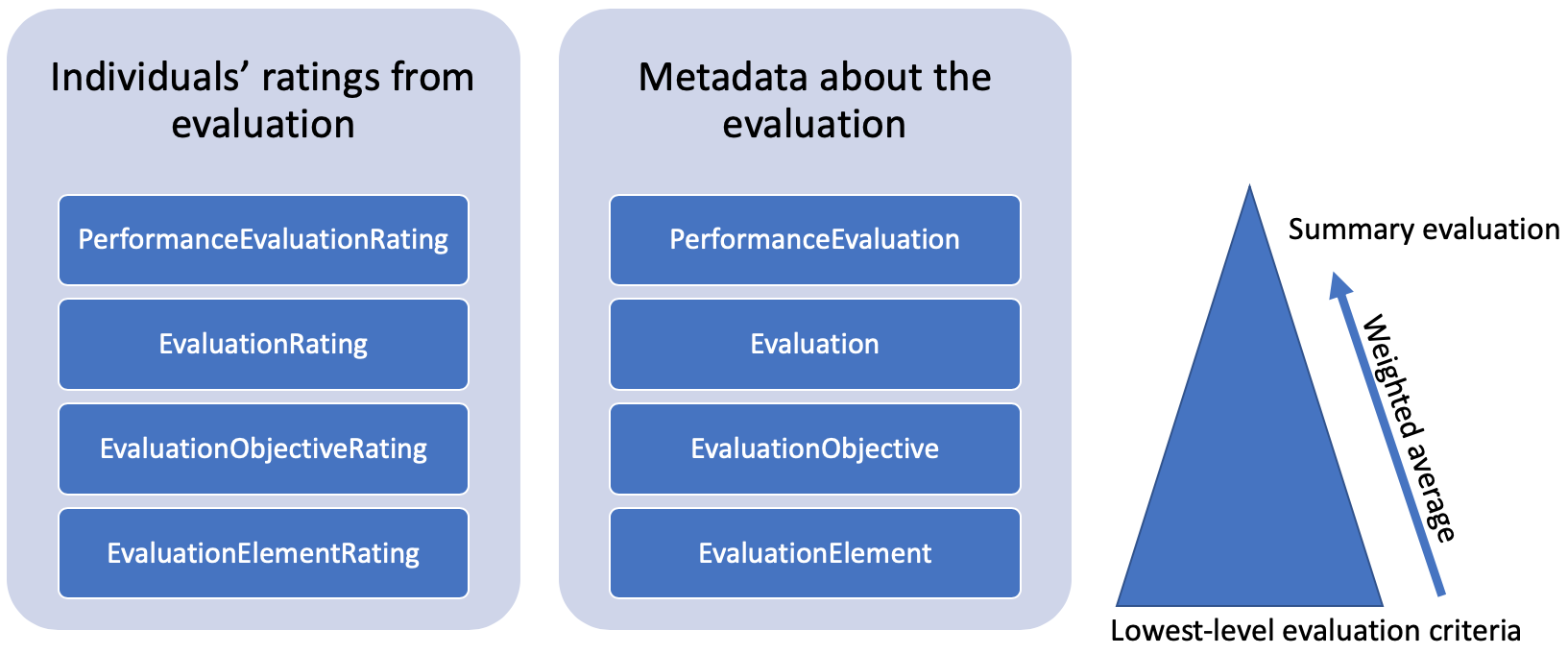

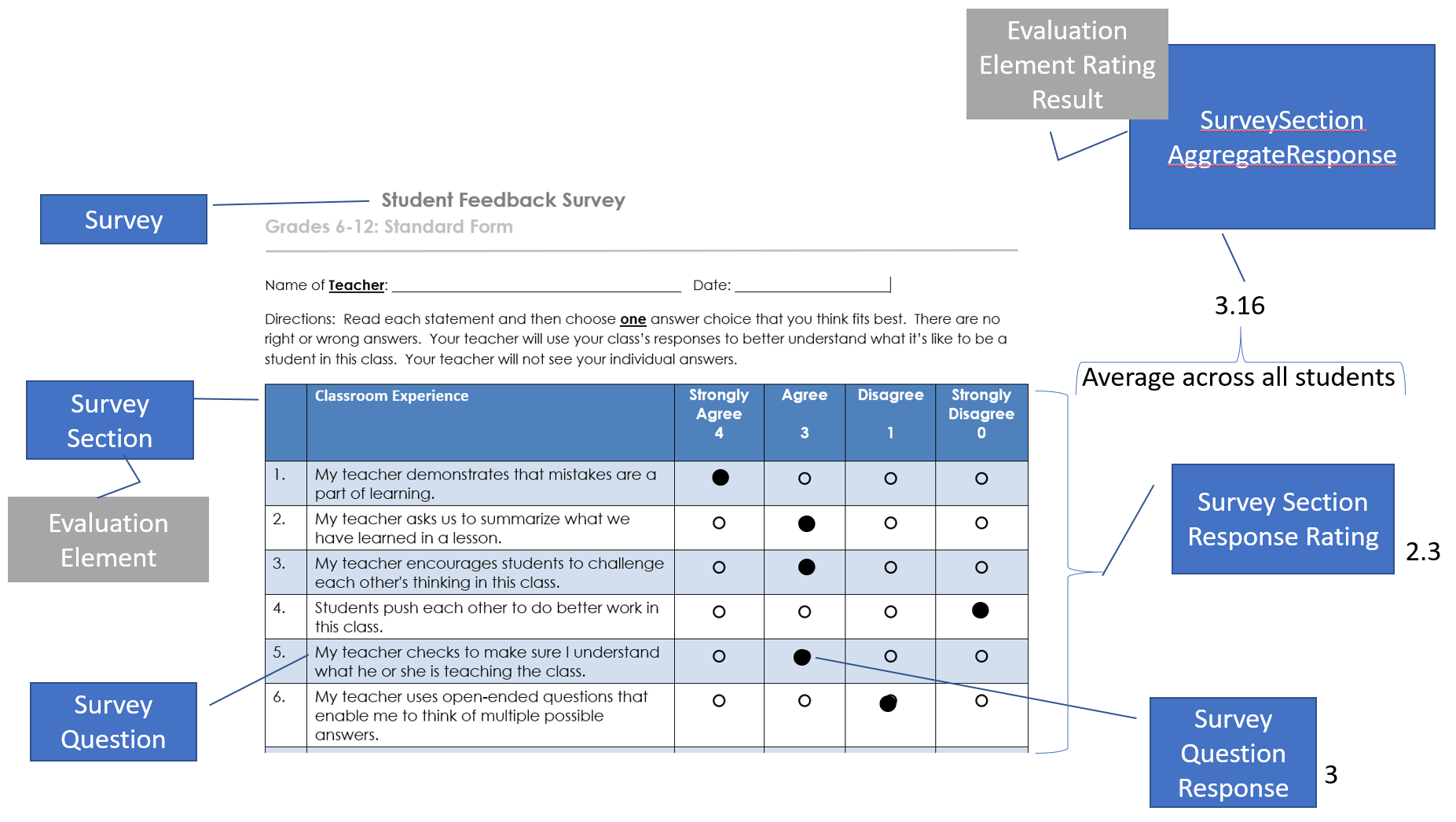

The following diagram illustrates how a survey response, such as a student survey, is mapped into the data model. Different Survey Sections would be mapped to different Evaluation Elements.

Figure 8. Survey Evaluation mapping

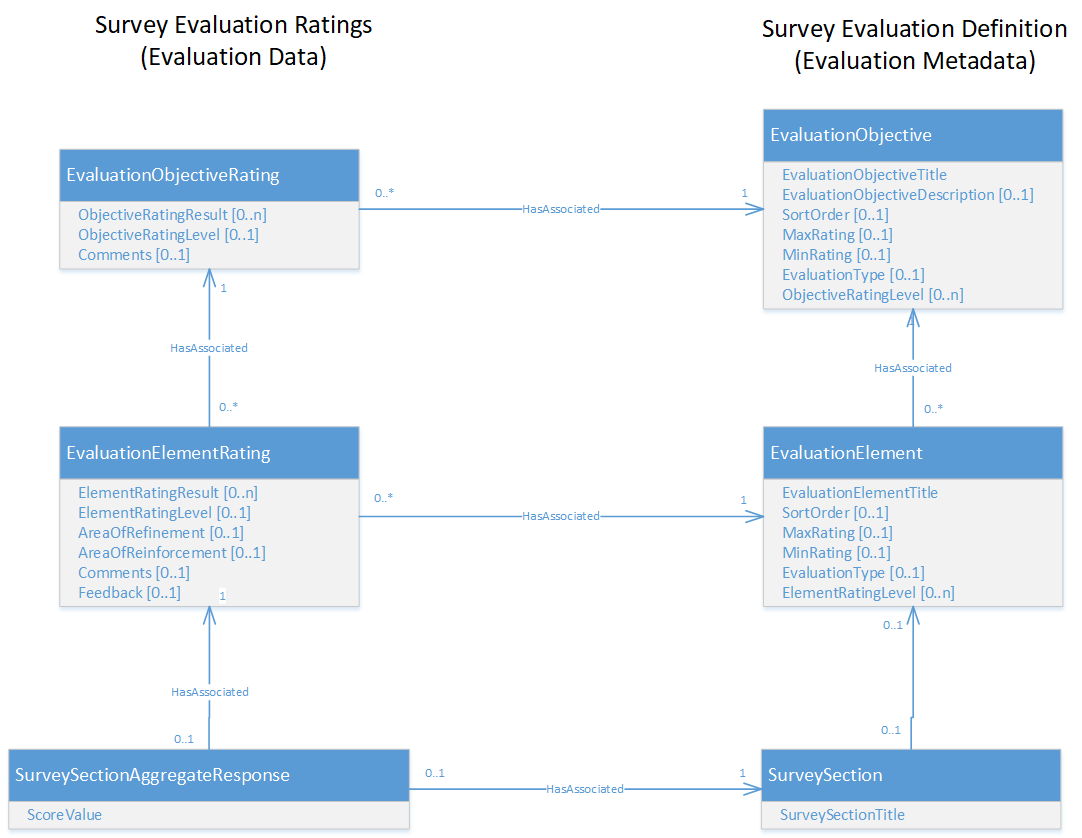
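To make the survey mapping concrete, the Python sketch below tallies individual responses per survey section and attaches each aggregate to an evaluation element. The section names, the section-to-element mapping, the use of a simple mean as the aggregate, and the helper `aggregate_by_element` are all assumptions for illustration; the model only states that responses are tallied per Survey Section.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def aggregate_by_element(
    responses: Iterable[Tuple[str, float]],
    section_to_element: Dict[str, str],
) -> Dict[str, float]:
    """Average the scores in each survey section and key the result by element."""
    scores_by_section: Dict[str, List[float]] = defaultdict(list)
    for section, score in responses:
        scores_by_section[section].append(score)
    return {
        section_to_element[section]: mean(scores)
        for section, scores in scores_by_section.items()
    }


# Hypothetical student survey responses as (survey section, score) pairs.
responses = [
    ("Classroom Climate", 4),
    ("Classroom Climate", 5),
    ("Instructional Clarity", 3),
    ("Instructional Clarity", 4),
]
# Hypothetical mapping of survey sections to evaluation elements.
section_to_element = {
    "Classroom Climate": "Engagement",
    "Instructional Clarity": "Clarity",
}
element_scores = aggregate_by_element(responses, section_to_element)
```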

A survey evaluation uses the following entities:

Figure 9. Survey Evaluation model
